Transfer learning & Multi-task learning

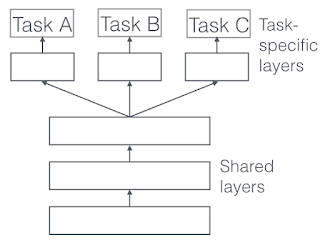

In transfer learning, you learn sequentially: train on task A, then transfer what was learned to task B. In multi-task learning, by contrast, you learn from multiple tasks simultaneously.

To apply transfer learning, first train the NN on a big task. Then, for a smaller task, retrain only the weights of the last layer (or the last 1-2 layers). You could also retrain all the parameters of the NN. In that case, the initial training on the big task is called pre-training, because it is used to initialize the weights of the NN, and updating the weights on the smaller task is called fine-tuning.

A couple of ways in which fine-tuning is done:

- Truncate the last layer of the NN and replace it with a new layer that learns the new output.
- Use a smaller learning rate to train the network.
- Freeze the weights of the first few layers of the NN.

When does transfer learning make sense? You have a lot of data for the task you are originally learning from and a small amount of data for the